Some points to ponder:

- “North American businesses lose $26.5 Billion annually from avoidable downtime” – CA Technologies

- “Enterprises that systematically manage the lifecycle of their IT assets will reduce costs by as much as 30% during the first year, and between 5 and 10% annually during the next five years.” – Patricia Adams, Gartner IT Asset Management and TCO Summit

Data center inefficiency affects all levels of the corporate hierarchy: from IT asset managers and data center directors, to accounting and finance professionals, right up to the folks sitting in the boardroom. The combination of skyrocketing energy costs and ever-increasing business demand for data and computing power has made it clear that improved data center asset management and power and cooling monitoring technologies aren’t just “nice to have”. They are vital for today’s businesses to thrive, or even to survive.

And yet adoption of automated asset management, real-time environmental monitoring technologies, and DCIM platforms has proven to be a slow process. The escalating costs of inefficiency are clearly understood, and the danger of downtime caused by a lack of data center visibility and intelligence is all too apparent. Even so, organizations often take months (some even years) to choose a solution, test it, and finally deploy it.

Which raises the question: What are you waiting for?

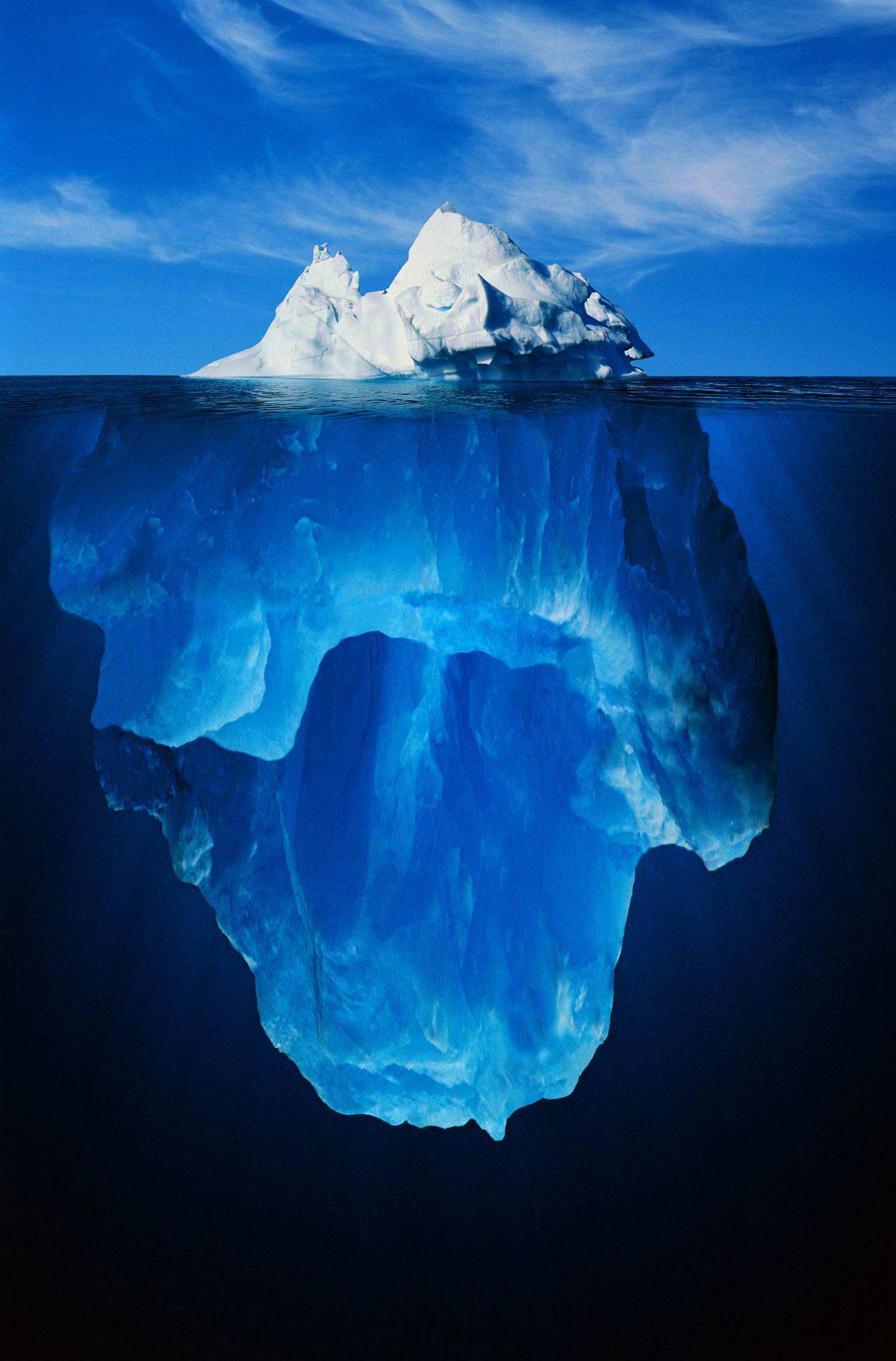

You know that every day without up-to-date asset management data is another day that your capacity planning and management decision-making abilities are limited. Every day without accurate, real-time environmental monitoring is another day of inefficient, wasteful power and cooling policies. Every day without reliable data center visibility is another day of increased risk.

And yet we’ve found that many of our customers didn’t commit to full deployment until after they had suffered a data center or business disaster. For some, it was the creeping realization that writing off 15-20% of their physical data center assets each year, simply because they couldn’t find them, was undermining their ability to plan capacity and costing their organization millions of dollars annually. For others, it was catastrophic losses caused by a downtime event that could easily have been prevented with some temperature, humidity, and leak detection sensors. And for still others, it was staggeringly high recurring operating expenses, coupled with a growing awareness of the environmental impact of data center power and cooling inefficiency.

All of these customers had one unfortunate thing in common: they all wished they’d deployed a comprehensive data center efficiency solution sooner. Despite clearly understanding the risks of running their data centers in “business as usual” mode, it took an event that turned a well-understood financial risk into a real-world financial loss for them to commit to modernizing and changing their policies.

So, what are the factors that are holding you back? Is it budget? Process re-engineering? The prospect of educating and training staff on new tools? Maybe you’re just having trouble choosing between the many options that are available in the marketplace. Or is it convincing “The Powers That Be” that business-as-usual is not necessarily the best road forward?

And most importantly: Will it take a data center disaster to overcome these obstacles? Let us know your thoughts and opinions in the comments below.